Tian said many teachers have reached out to him after he released his bot online on Jan. 2, telling him about the positive results they've seen from testing it.

More than 30,000 people had tried out GPTZero within a week of its launch. It was so popular that the app crashed. Streamlit, the free platform that hosts GPTZero, has since stepped in to support Tian with more memory and resources to handle the web traffic.

How GPTZero works

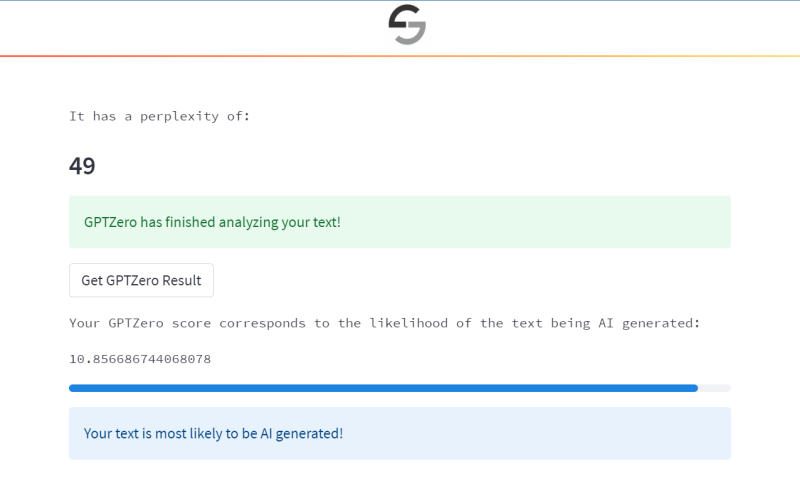

To determine whether an excerpt was written by a bot, GPTZero uses two indicators: "perplexity" and "burstiness." Perplexity measures the complexity of a text, in the sense of how hard it is for a language model to predict. If GPTZero is perplexed by the text, then the text has high complexity and is more likely to be human-written. However, if the text is more familiar to the bot, because it has been trained on similar data, then it will have low complexity and is therefore more likely to be AI-generated.
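GPTZero's exact scoring model isn't described here. As a rough illustration of the idea, the sketch below computes perplexity, the exponential of the average negative log-probability a model assigns to each word given the one before it. It assumes a toy bigram model with add-one smoothing, trained on a tiny made-up corpus, in place of the large neural language model a real detector would use; the function names and sample sentences are hypothetical.

```python
import math
from collections import Counter

# Toy stand-in for a language model: a bigram model with add-one smoothing.
# A real detector would use a large neural model; this is only illustrative.
def train_bigram(tokens):
    context_counts = Counter(tokens[:-1])           # how often each word starts a pair
    pair_counts = Counter(zip(tokens, tokens[1:]))  # how often each word pair occurs
    vocab_size = len(set(tokens)) + 1               # +1 reserves room for unseen words
    return context_counts, pair_counts, vocab_size

def perplexity(tokens, model):
    """exp of the average negative log-probability of each word given the previous one."""
    context_counts, pair_counts, vocab_size = model
    if len(tokens) < 2:
        raise ValueError("need at least two tokens")
    total_log_prob = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        # Laplace smoothing: unseen pairs still get a small, nonzero probability
        p = (pair_counts[(prev, cur)] + 1) / (context_counts[prev] + vocab_size)
        total_log_prob += math.log(p)
    return math.exp(-total_log_prob / (len(tokens) - 1))

corpus = "the cat sat on the mat the cat sat on the mat".split()
model = train_bigram(corpus)

familiar = "the cat sat on the mat".split()    # word order the model has seen
unfamiliar = "mat the on sat cat the".split()  # same words, unfamiliar order
print(perplexity(familiar, model))
print(perplexity(unfamiliar, model))
```

The sentence whose word order matches the training data gets a noticeably lower perplexity than the scrambled one, which mirrors the article's point: text that looks like what a model was trained on seems "unsurprising" to it.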

Burstiness, the second indicator, measures how much sentences vary. Humans tend to write with greater burstiness, for example, mixing longer or more complex sentences with shorter ones. AI-generated sentences tend to be more uniform.
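The article doesn't give GPTZero's formula for burstiness. One simple, hypothetical proxy for "variation between sentences" is the standard deviation of sentence length in words, sketched below; the naive sentence splitter and the sample texts are assumptions made for illustration only.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and count the words in each sentence (a naive splitter)."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Hypothetical proxy: population standard deviation of sentence length in words."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = ("Stop. The storm rolled in over the hills before anyone "
          "had a chance to react. We ran.")
print(sentence_lengths(uniform))  # [4, 4, 4]
print(sentence_lengths(varied))   # [1, 14, 2]
print(burstiness(uniform), burstiness(varied))
```

The evenly sized sentences score zero, while the mix of very short and long sentences scores high. A detector could just as plausibly measure variation in per-sentence perplexity instead of length; sentence length is used here only because it is easy to see.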

In a demonstration video, Tian compared the app's analysis of a story in The New Yorker with its analysis of a LinkedIn post written by ChatGPT. The app correctly identified which text was written by a human and which was written by AI.

Tian acknowledged that his bot isn't foolproof, as some users have reported when putting it to the test. He said he's still working to improve the model's accuracy.

But by designing an app that sheds some light on what separates human writing from AI-generated text, Tian is working toward a core mission: bringing transparency to AI.

"For so long, AI has been a black box where we really don't know what's going on inside," he said. "And with GPTZero, I wanted to start pushing back and fighting against that."

The quest to curb AI plagiarism

The college senior isn't alone in the race to rein in AI plagiarism and forgery. OpenAI, the developer of ChatGPT, has signaled a commitment to preventing AI plagiarism and other nefarious applications. Last month, Scott Aaronson, a researcher currently focusing on AI safety at OpenAI, revealed that the company has been working on a way to "watermark" GPT-generated text with an "unnoticeable secret signal" to identify its source.

The open-source AI community Hugging Face has put out a tool to detect whether text was created by GPT-2, an earlier version of the AI model used to make ChatGPT. A philosophy professor in South Carolina who happened to know about the tool said he used it to catch a student submitting AI-written work.

The New York City education department said on Thursday that it's blocking access to ChatGPT on school networks and devices over concerns about its "negative impacts on student learning, and concerns regarding the safety and accuracy of content."

Tian is not opposed to the use of AI tools like ChatGPT.

GPTZero is "not meant to be a tool to stop these technologies from being used," he said. "But with any new technologies, we need to be able to adopt it responsibly and we need to have safeguards."

Copyright 2023 NPR. To see more, visit https://www.npr.org.
