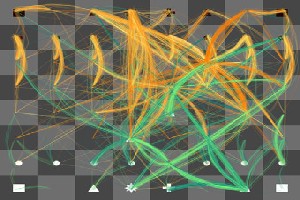

Visualization of possible chess move sequences (try it here)

Artificial Intelligence has always held a special affinity for games. Chess, in particular, was long considered a realm reserved for exquisite human intelligence: the greatest chess players are called Grandmasters; a large percentage of them are eccentric Russian introverts. Garry Kasparov's defeat by Deep Blue, IBM's specialized supercomputer, in 1997 was heralded as a major milestone (he contends the match was unfair). But while the dominance of chess-playing software is culturally significant, does it matter for AI?

Chess, like Checkers, Connect-4, and Go, is a game of perfect information. That is, everything useful for choosing your next move is right there on the board (it would be nice to know what your opponent will do next, but you can assume that your opponent is just trying to make the best possible move too). If you had a computer powerful enough, it could consider every possible next move, every possible response, and so on, and finally deduce, absolutely, how to guarantee a particular outcome. To do this is to solve chess, to answer the question: is it possible for white to force a win? Checkers is solved (both players can force a draw). Connect-4 is solved (the first player can force a win). Chess has too many possible board positions to be solved anytime soon.
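To make "solving" a game concrete, here is a minimal sketch that exhaustively searches tic-tac-toe, a perfect-information game small enough to finish instantly. The game choice, the board encoding (a 9-character string with `.` for empty cells), and the function names are assumptions made for illustration; nothing here is specific to chess.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, cells indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != '.' and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def solve(board, player):
    """Value of the position with `player` to move:
    +1 if X can force a win, -1 if O can, 0 if best play is a draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == 'X' else -1
    if '.' not in board:
        return 0  # board full, nobody won
    other = 'O' if player == 'X' else 'X'
    # Try every legal move and every reply, all the way to the end of the game.
    values = [solve(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == '.']
    return max(values) if player == 'X' else min(values)


print(solve('.' * 9, 'X'))  # prints 0: neither player can force a win
```

The same recursion would, in principle, answer the question for chess. The obstacle is purely the number of positions, which is why the cache that makes this toy example fast is hopeless at chess scale.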

Deep Blue can compete with human players by searching many moves ahead, testing all possible combinations, and choosing the next move that leaves its opponent with the worst best option. This approach is called minimax search. Since the computer can't search all the way to every possible checkmate, it searches to a given depth and scores the resulting board position by the pieces each player still has (roughly speaking, a pawn is 1 point, knights and bishops are 3 points each, a rook is 5 points, and the queen is 9 points). Using this rubric, or heuristic, and searching 10-15 moves into the future makes for an extremely formidable opponent.
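The idea of searching to a fixed depth and then scoring by material can be sketched in a few lines. This is a toy illustration, not Deep Blue's actual code: the hand-built tree, the piece-string encoding (uppercase for White, lowercase for Black), and the names `material`, `minimax`, and `best_move` are all assumptions, and the queen is given its conventional value of 9.

```python
# Rough piece values; the queen uses the conventional 9 (an assumption here).
PIECE_VALUES = {'P': 1, 'N': 3, 'B': 3, 'R': 5, 'Q': 9}


def material(pieces):
    """Score a position from White's point of view.
    Uppercase letters are White's pieces, lowercase are Black's."""
    score = 0
    for p in pieces:
        value = PIECE_VALUES.get(p.upper(), 0)  # kings count as 0
        score += value if p.isupper() else -value
    return score


def minimax(node, depth, white_to_move):
    """Depth-limited minimax over a hand-built tree.
    A node is a dict with 'pieces' and a list of 'children'."""
    if depth == 0 or not node['children']:
        return material(node['pieces'])  # stop searching, apply the heuristic
    scores = [minimax(child, depth - 1, not white_to_move)
              for child in node['children']]
    return max(scores) if white_to_move else min(scores)


def best_move(node, depth):
    """Index of White's move whose worst-case outcome is best."""
    scores = [minimax(child, depth - 1, False) for child in node['children']]
    return scores.index(max(scores))


def leaf(pieces):
    return {'pieces': pieces, 'children': []}


# White to move. Move 0 grabs a pawn but lets Black capture the queen;
# move 1 is a quiet move that keeps material unchanged.
tree = {
    'pieces': 'KQPkrp',
    'children': [
        {'pieces': 'KQPkr', 'children': [leaf('KPkr'), leaf('KQPkr')]},
        {'pieces': 'KQPkrp', 'children': [leaf('KQPkrp')]},
    ],
}

print(best_move(tree, 1))  # prints 0: a one-move search grabs the pawn
print(best_move(tree, 2))  # prints 1: looking one reply deeper avoids losing the queen
```

The two calls show why depth matters: the same heuristic gives opposite advice depending on how far ahead the search looks.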

Minimax theory was established by John von Neumann in 1928, and the algorithm was improved in the 1950s and 60s to run more efficiently. Deep Blue contains no general innovation that improves significantly on these now classic techniques. The heuristic for evaluating boards has been refined, and the program has a huge database of well-known openings and of end-game sequences (for positions with 5 or fewer pieces left on the board). Thus, Deep Blue is less a marvel of Artificial Intelligence than of engineering: its success is a direct product of the number of positions it can consider per second (200 million). This is the brute-force method of problem solving at its finest.