Ilana Marcucci-Morris [00:01:05] A lot of our members are afraid that it’s going to shift into full-blown therapy, right? That there are going to be new technologies that allow Kaiser to provide, you know, chat-based mental health care.

Ericka Cruz Guevarra [00:01:20] Today, will AI replace your therapist? And why the debate at Kaiser is worth watching.

April Dembosky [00:01:38] Health care is an industry that is usually pretty slow to adopt new technology, but the experts that I talk to say that AI is different.

Ericka Cruz Guevarra [00:01:49] April Dembosky is a healthcare correspondent for KQED.

April Dembosky [00:01:52] Health systems are really excited about the potential that AI has primarily in this moment to improve diagnostics, but also to cut down on paperwork and administrative tasks. So if you go see a medical doctor at Sutter or Kaiser right now, very likely you have been or very soon will be asked if it’s okay for the doctor to use an AI note taker where they will use their cell phone. To record the interaction and then the AI will summarize and write notes for your medical chart.

The Pitt [00:02:29] I have an app on my phone that can listen to our conversation and the details of my physical exam and write it all up in your medical record. Wow.

April Dembosky [00:02:39] If folks out there are watching The Pitt, this actually came up in episode two, the episode that just came out last week. There’s a new doctor in the ER, and she’s introducing the residents to the concept of AI note takers.

Doctor (The Pitt) [00:02:55] What do you think?

Medical Student (The Pitt) [00:02:56] Well, I don’t think it’s a cardiac.

Doctor (The Pitt) [00:02:58] I mean, what do you think of the app?

Medical Student (The Pitt) [00:03:00] I mean, it’s hard to say without seeing the full thing.

Doctor (The Pitt) [00:03:02] Take a look.

Medical Student (The Pitt) [00:03:04] Oh my God, do you know how much time this will save?

April Dembosky [00:03:07] And so she takes out her cell phone in the exam room and tells a patient, you know, it’s going to record their interaction. And afterward they walk out, they walk over to a computer and the AI has already written a summary of the exam in the patient’s chart.

Medical Student (The Pitt) [00:03:24] Well, excuse me, it says here she takes Risperdal, an antipsychotic. She takes Restoril when needed for sleep, so is that, um…

Medical Student (The Pitt) [00:03:31] AI, almost intelligent.

Doctor (The Pitt) [00:03:34] You must always carefully proofread and correct the minor errors. It’s excellent, but not perfect.

April Dembosky [00:03:44] That’s one of the ways that AI is most present in our health care right now. I have friends in the Bay Area who work in health care, and when I saw some of them for dinner a little while ago, they said, “I am here tonight because of the AI note taker. Because the AI note taker did my charting for me, I am able to be here hanging out with you.”

Ericka Cruz Guevarra [00:04:12] Wow, that is so interesting. And yeah, I mean, we’re wrestling with the role of AI in our health care in popular culture, but also in real life right now. I know this is a big, big question that is especially relevant for mental health care workers in Northern California right now, specifically at Kaiser. April, can you explain why this is such a relevant conversation among mental health care workers right now in particular?

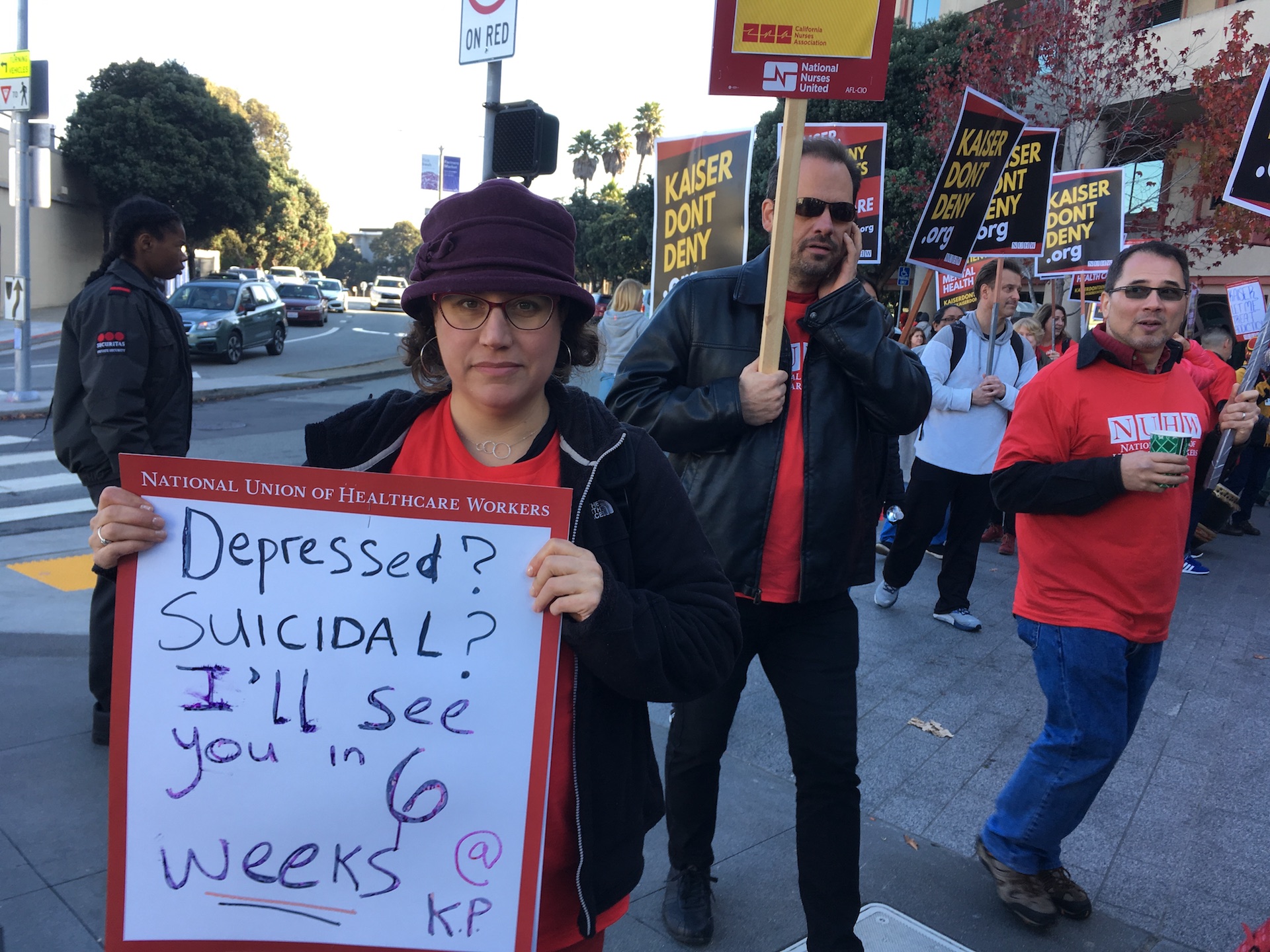

April Dembosky [00:04:44] Sure. So mental health clinicians at Kaiser are in contract negotiations right now. They’ve actually been bargaining over this next contract for about six months, so it’s been dragging along. And one of the sticking points is actually around AI. Mental health workers know that AI is here to stay in health care, but when it comes to mental health care, they want some simple guardrails. They want to be part of making sure that AI is rolled out responsibly, in a way that protects patients’ privacy but also protects their own jobs. And so one of the things they’ve asked for in their contract is language that says specifically that any new AI tools will be used only to assist therapists, not to replace them. To them, I think this sounds like a really reasonable ask, but they were really surprised when Kaiser said no.

Ilana Marcucci-Morris [00:06:00] So again, they want flexibility to increase their use of AI.

April Dembosky [00:06:06] I talked to Ilana Marcucci-Morris. She’s a clinical social worker at Kaiser. She works in the intake department, and she’s a member of the union that is bargaining this contract. It’s called the National Union of Healthcare Workers, NUHW.

Ilana Marcucci-Morris [00:06:22] When we ask that AI not replace us, they will not put that language in.

April Dembosky [00:06:28] And when I talked to her, she said, “I’m a millennial, I love gadgets, I love tools, you know, I get it. We just want some simple protections here.”

Ilana Marcucci-Morris [00:06:37] A lot of our members are afraid that it’s going to shift into full-blown therapy, right? That there are going to be new technologies that allow Kaiser to not just skip the licensed triage, but to provide, you know, chat-based mental health care.

April Dembosky [00:06:54] One of the reasons they’re surprised is that their sister union in Southern California had asked for the same language, and Kaiser agreed to it. That contract was signed last May. So basically, you know, a month or so after signing a contract that included this language, Kaiser was backing off, saying, we don’t wanna commit to that anymore.

Ilana Marcucci-Morris [00:07:20] I mean, they’ll say, no, that’s not our intention. But when we say, hey, can you put that it’s not your intention in the contract? Well, we can’t predict the future. We need to maintain flexibility.

Ericka Cruz Guevarra [00:07:38] So it sounds like these mental health care workers are afraid for their jobs, but some of this technology is already being used. What is it about these tools that they are so concerned about?

April Dembosky [00:07:54] Basically, Kaiser is excited about getting clinicians to use this note-taking software so that it will free them up to see more patients in a day. But clinicians are really worried about this. I think they’re worried about the privacy and data security, where are these recordings going, how long are they kept, how well are they protected, who else can see them. But specifically, I think they’re also really concerned about how this technology could influence the patient interaction.

Ilana Marcucci-Morris [00:08:27] I wouldn’t want a recording of my disagreements with a family member or my trauma.

April Dembosky [00:08:34] And so what Ilana says is that talking to your doctor about a fever or a skin condition is really different from talking to your therapist about really vulnerable, really emotional things that are going on in your life. And they’re concerned that if patients know they’re being recorded, it might cause them to hold back.

Ilana Marcucci-Morris [00:09:00] A big part of our work is that human connection and rapport.

April Dembosky [00:09:08] So Kaiser clinicians are basically saying, look, you know, right now this technology is optional for us to use, but we’re really worried that Kaiser is going to, you know, try to force us to use it, perhaps even in clinical situations where we think it could be harmful.

Ilana Marcucci-Morris [00:09:27] Having a human being in your court that is trained and is a professional giving you warmth and encouragement and evidence-based direction is something that technology just can’t replace.

Ericka Cruz Guevarra [00:09:44] AI has already taken on roles that people used to have at Kaiser, like doing intake for mental health care. Patients now have the option of doing an e-visit through the app, where you click through a series of questions and the algorithm comes up with a score and recommends where you go next. So far, there isn’t a Kaiser therapist chatbot, though that hasn’t stopped a lot of people from seeking help for their problems outside of the health care system altogether. And April, we’re also in an environment where many people, including teenagers, are seeking out mental health help through chatbots. Are patient preferences around this changing as well? And how do mental health experts respond to that?

April Dembosky [00:10:40] In the last few years, we’ve seen a huge rise of consumer-facing chatbots. These are not therapists, to be clear, but people are starting to use them as therapists. This is a trend that has already taken off, because they are available immediately, you can tell them how to interact with you, and they are always there. There are clinical psychologists who have, you know, been working on a verified, evidence-based, widely tested kind of AI chatbot for therapy for at least six years now. And what they will tell you is that it takes a really, really long time to develop a proven product like that, one that actually conforms to the standards of delivering therapy.

Jodi Halpern [00:11:33] We cannot institute any of this on a large scale population level without studying it first and making sure it’s safe.

April Dembosky [00:11:42] Jodi Halpern is a bioethics professor at UC Berkeley. She talks about the potential that chatbots have in cognitive behavioral therapy, which is a particular kind of therapy that tends to be a little more formal, a little more formulaic, but she’s very circumspect when it comes to relational therapy.

Jodi Halpern [00:12:04] In the meeting with an empathic human face-to-face, there is the possibility for the patient really to develop trust. And that’s actually a powerful element in improving health outcomes.

April Dembosky [00:12:20] Chatbots are not very good at this, especially consumer-facing chatbots, which are designed to be affirmative. Sycophantic is the word that experts use. They’re designed to validate everything you say.

Jodi Halpern [00:12:40] We need more skillful human workforce in the mental health area to meet our unmet needs. We need AI to unburden the skillful human force through ambient medical records and other forms that don’t have to be intrusive or overly privacy invading, but they can take the workload off clinicians.

Ericka Cruz Guevarra [00:13:06] Coming back to Kaiser, April, many of their employees are already using AI. There are no Kaiser AI therapists as of now, although many of the workers, like Ilana, are worried that there could be. Has Kaiser had any response to this story, or any thoughts on AI or the contract negotiations that they’ve shared with you?

April Dembosky [00:13:28] Kaiser has not had a lot to say about this. I’ve interacted with them a fair amount, asking for interviews multiple times, and they have not been willing to sit down and talk about this, but they shared a statement. It says, in part, that artificial intelligence tools at Kaiser don’t make medical decisions: “Our physicians and care teams are always at the center of decision-making with our patients. AI does not replace human assessment and care.” But they do see artificial intelligence as holding, as they say, “significant potential to benefit health care by supporting better diagnostics, enhancing patient-clinician relationships, optimizing clinicians’ time, and ensuring fairness in care experiences and health outcomes by addressing individual needs.”

Ericka Cruz Guevarra [00:14:23] And I mean, consumer trends around AI are one thing, April, but it also seems like large health care systems like Kaiser have a really big role to play in shaping how AI is used in the future. I mean, why do you think it’s important to watch what Kaiser does from here?