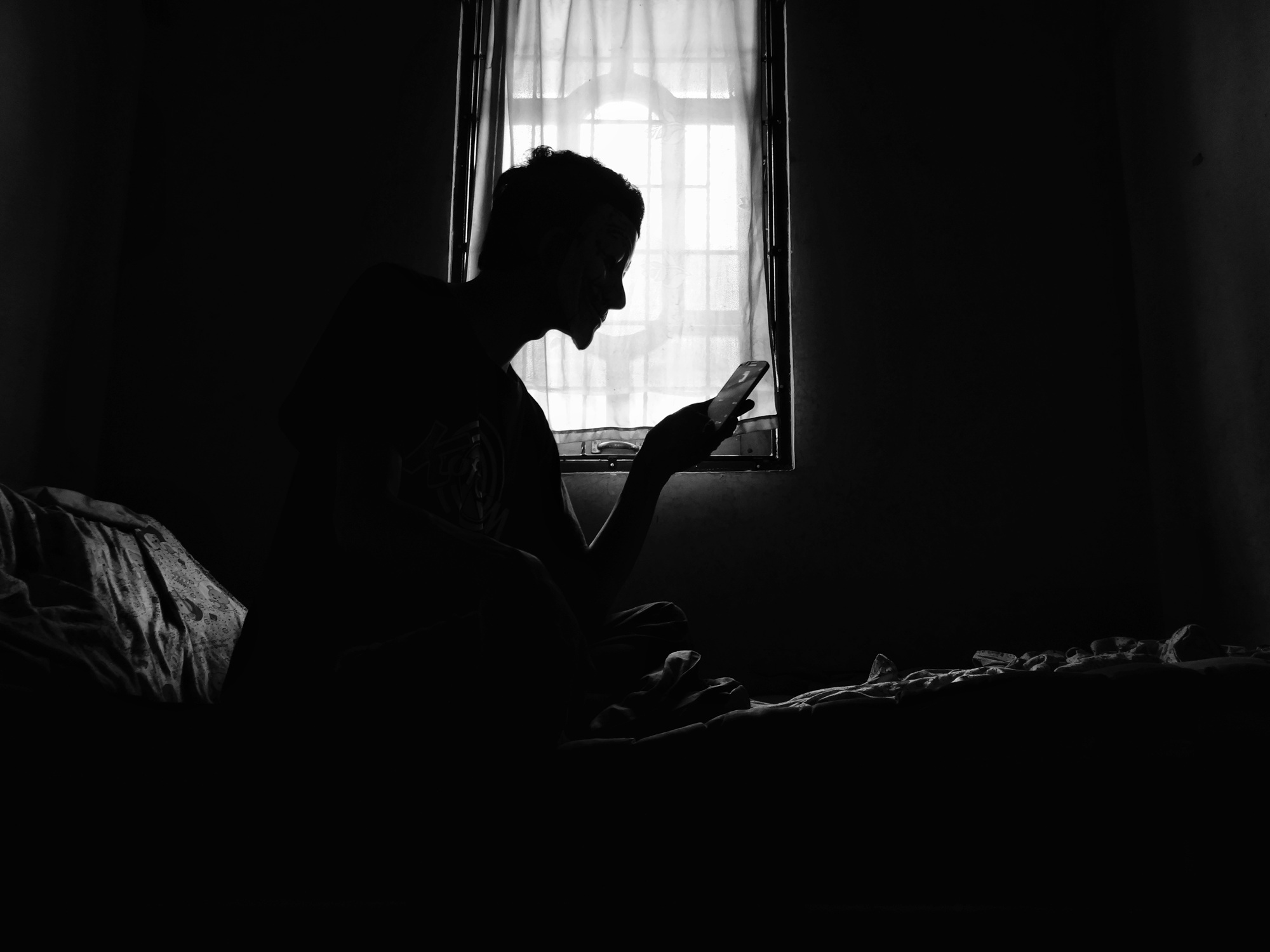

“This is not a toaster. This is an AI chatbot that was designed to be anthropomorphic, designed to be sycophantic, designed to encourage people to form emotional attachments to machines. And designed to take advantage of human frailty for their profit.”

“This is an incredibly heartbreaking situation, and we’re reviewing today’s filings to understand the details,” an OpenAI spokesman wrote in an email. “We train ChatGPT to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians.”

Following a lawsuit filed last summer against OpenAI by the family of Adam Raine, a teenager who ended his life after lengthy ChatGPT conversations, the company announced changes in October intended to help the chatbot better recognize and respond to mental distress and guide people to real-world support.

AI companies are facing increased scrutiny from lawmakers in California and beyond over how to regulate chatbots, as well as calls for better protections from child-safety advocates and government agencies. Character.AI, another AI chatbot service that was sued in late 2024 in connection with a teen suicide, recently said it would prohibit minors from engaging in open-ended chats with its chatbots.

OpenAI has characterized ChatGPT users with mental-health problems as outlier cases representing a small fraction of weekly active users. But the platform serves roughly 800 million weekly active users, so even small percentages could amount to hundreds of thousands of people.

More than 50 California labor and nonprofit organizations have urged Attorney General Rob Bonta to make sure OpenAI follows through on its promises to benefit humanity as it seeks to transition from a nonprofit to a for-profit company.

“When companies prioritize speed to market over safety, there are grave consequences. They cannot design products to be emotionally manipulative and then walk away from the consequences,” Daniel Weiss, chief advocacy officer at Common Sense Media, wrote in an email to KQED. “Our research shows these tools can blur the line between reality and artificial relationships, fail to recognize when users are in crisis, and encourage harmful behavior instead of directing people toward real help.”

If you are experiencing thoughts of suicide, call or text 988 to reach the 988 Suicide & Crisis Lifeline.