The draft regulations would require businesses to assess and report privacy risks, perform annual cybersecurity audits, and give consumers more control over how automated systems (like AI and profiling tools) use their personal data. The public comment period for the draft regulations closed on February 19. The board discussed those comments at its April meeting and will discuss them again on May 1.

The broad scope of the conversation brought out a wide array of interested parties, including not just the governor but also industry lobbyists and consumer advocates.

“AI, social media and data privacy are fundamentally intertwined, and if we are going to protect consumers and our democracy, from these combined, interwoven threats, you have to be talking about all of them all at once,” Stein said. “Right now, social media and AI are almost totally unregulated.

“California has made some good starts on data privacy in some recent bills in recent years, but there is almost no industry I can think of that has an impact on our lives so enormous, and sits under a regulatory regime so light and so minimal.”

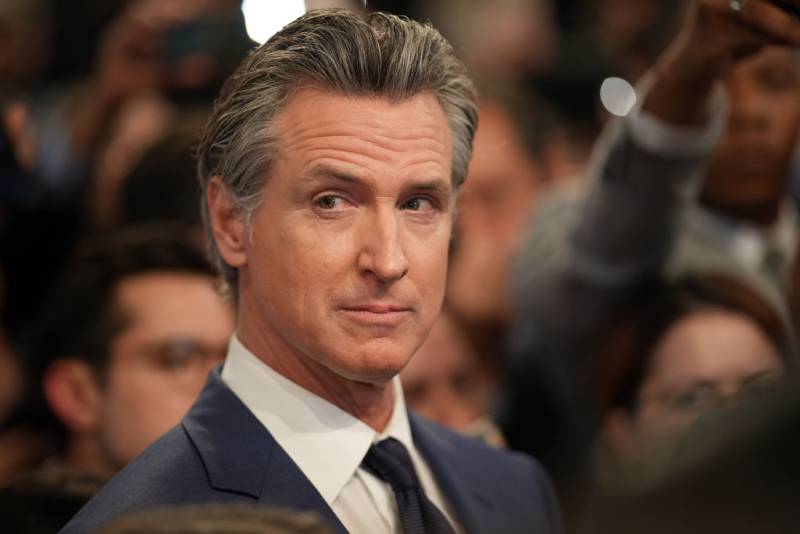

Newsom has a reputation in Sacramento for lending a friendly ear to industry concerns. He has killed a couple of the most controversial AI bills, like one that would have required large-scale AI developers to submit their safety plans to the state attorney general, and two that would have forced tech platforms to share ad revenues with news organizations.

However, Newsom has also signed many bills that consumer advocates like, addressing everything from online privacy to critical infrastructure.

At a board meeting three weeks ago, CPPA board member Alastair Mactaggart worried that moving forward aggressively could trigger industry lawsuits designed to bury the agency’s small staff in paperwork.

Or Silicon Valley lobbyists might appeal to President Donald Trump and the Republican-controlled Congress to preempt California’s privacy protections with weaker federal rules. However, it’s not clear how friendly that audience would be, given the federal government’s continued aggressive legal assaults against Google and Meta.

“Rules around artificial intelligence are really a part of privacy law, because they govern the control that people should have over the use of information about them, and the use of that information that affects people’s lives,” said Snow, urging the board to move forward on “common sense restrictions on this technology.” What defines “common sense,” however, is a matter of continued debate.

The CPPA board has a November deadline to finalize the rules.