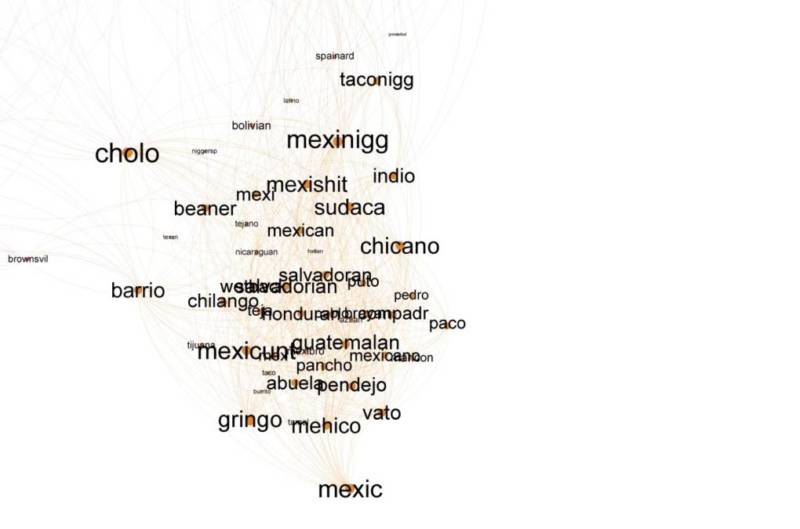

Note: this article’s visual assets contain offensive language.

Mass shootings have become all too common in America, with attacks now occurring weekly, if not daily. In the wake of Gilroy, Dayton and El Paso, it’s worth noting that a man who studies hate speech on social media predicted the frequency of these attacks a year ago.

Joel Finkelstein, director of the Network Contagion Research Institute, says the attacks may seem random, but they’re not. “They’re not coming out of nowhere,” he said.

Finkelstein’s institute uses machine learning tools to identify, track and expose hate speech online, drawing from mainstream as well as extremist communities. The gunmen, typically young, white and male, more often than not echo language seen on platforms like 4chan, 8chan and Gab.

Finkelstein said these platforms need to be held legally accountable, perhaps by the families of those killed in mass shootings, who could sue platforms over their willingness to host conversations inciting readers to violence.

Recall what Matthew Prince, CEO of Cloudflare, wrote earlier this week as he announced that the San Francisco-based web infrastructure company had terminated its support services for 8chan: “The rationale is simple: they have proven themselves to be lawless and that lawlessness has caused multiple tragic deaths.”

8chan has been unreachable since Cloudflare cut ties with it. But 4chan, home of the message board Politically Incorrect, from which the words in the graphic above were pulled, is still up and running. Should that platform be taken down, it’s not difficult to imagine another entity, perhaps overseas, hosting something like 16chan.