If your best friend or your mom gives you a call on the telephone, you probably know who it is right away. You trust that if you hear their voices, it could only be them. Well, that could soon change. A new company called Lyrebird is working on software that can copy someone’s voice and make it say anything. Listen to this.

Coming Soon: Software That Can Perfectly Imitate Your Voice

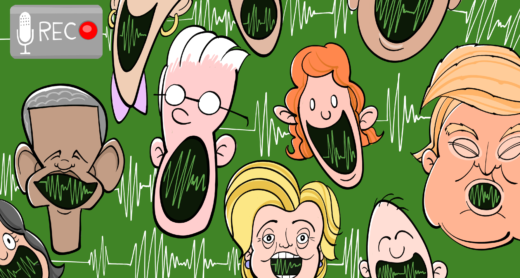

This is an entirely fake conversation Lyrebird made and posted on its website as an example of what its software can do. The technology is far from perfect. Voices still sound robotic and disjointed. Still, if you listen to this audio sample, you can most likely identify who the software is copying: former President Barack Obama and President Donald Trump. The fact that it can get this close raises questions about what will happen in the not-so-distant future.

According to Lyrebird, a minute-long recording of a voice is all it takes to start replicating it. The output sounds robotic now, but the company says the software will soon get much better: as more data are fed into its algorithms, they teach themselves to sound more human. Because this is machine learning, the improvement could be exponential, said Steve Weber, a professor at UC Berkeley's School of Information.

“What sounds like close today, in a year could sound perfect or fundamentally indistinguishable, at least by the human ear,” Weber said.

He said if Lyrebird doesn’t reach that point, someone else soon will.

“I am confident that it is being done in government and non-commercial realms,” Weber said. “And it has been worked on for some time, I would expect.”

If the technology is perfected, it would open that old Pandora's box. There would be the potential for all kinds of fake news, impersonations and scams. People could synthesize a recording of a powerful figure, like Trump, saying anything. Anything.

Remember "The War of the Worlds," Orson Welles' 1938 radio drama in which fake journalists reported an alien invasion? Imagine how frightening it would be if someone inserted the voices of real presidents into a drama like that, and instead of a war of the worlds, it was a war between countries.

Jose Sotelo is one of Lyrebird’s three founders, all computer science Ph.D. students at the University of Montreal. He realizes their technology could be dangerous, which is actually part of the reason they are developing and planning to release it.

“We believe that we can really mitigate the problems by making it public in the same way that right now, if you see an image of myself standing on the moon, you’re likely to think this image is fake because you know the existence of image-editing software,” Sotelo said.

Sotelo said that while the company's voice replication technology is admittedly scary, it also has noble applications. It could bring back the voices of cancer patients who can no longer speak, or of a deceased loved one, which is an interesting idea but could also be a bit unsettling. Regardless of how you feel about those uses, Sotelo said someone has to show the world that voices can be copied.

“It is not a really comforting solution, not being able to trust audio anymore,” Sotelo said, “but we believe this is basically the only solution available.”

Lyrebird plans to make its software available for other developers to use, and the founders said they want to be public about the capabilities of their technology.

Does putting the work out in public absolve Lyrebird of responsibility for potential misuse? Is it ethical to advance this technology and release it to the world? Lots of tech companies try to sidestep ethical questions by being transparent, said Deirdre Mulligan, a professor and director at UC Berkeley's Center for Law and Technology.

“I think people often think about transparency as some magic pixie dust,” Mulligan said. “So we’re going to be transparent about what we’re doing and therefore somehow the public is going to be knowledgeable, and the assumption is they are going to engage in some kind of self help. What kind of self-help are you going to do?”

We would need to learn to live in a world where voices can be copied and can’t be trusted — where just because it sounded like your mother or friend or president said something, it won’t mean they actually did.

That world could take people a long time to get used to.