Last week Eric Yuan, CEO of the now exhaustingly ever-present videoconferencing company Zoom, told investors that Zoom would not offer end-to-end encryption to free users. Why? Because of Zoom’s commitment to supporting and aiding the FBI and local police.

As social distancing requires unprecedented reliance on the internet, and the ongoing Black Lives Matter protests bring people’s attention to the importance of digital security, Zoom’s decision is a reminder that technology is never neutral.

Also last week, digital artist Rafael Lozano-Hemmer posed a simple but fundamental question about the unfolding political movement: “How can I be useful as an artist?” Many contemporary artists are critically engaging with technology to answer that question. Here are five artist-made tools that support protests on the ground, resist surveillance’s biased gaze, fight back against social alienation and combat technologies imbued with anti-Blackness.

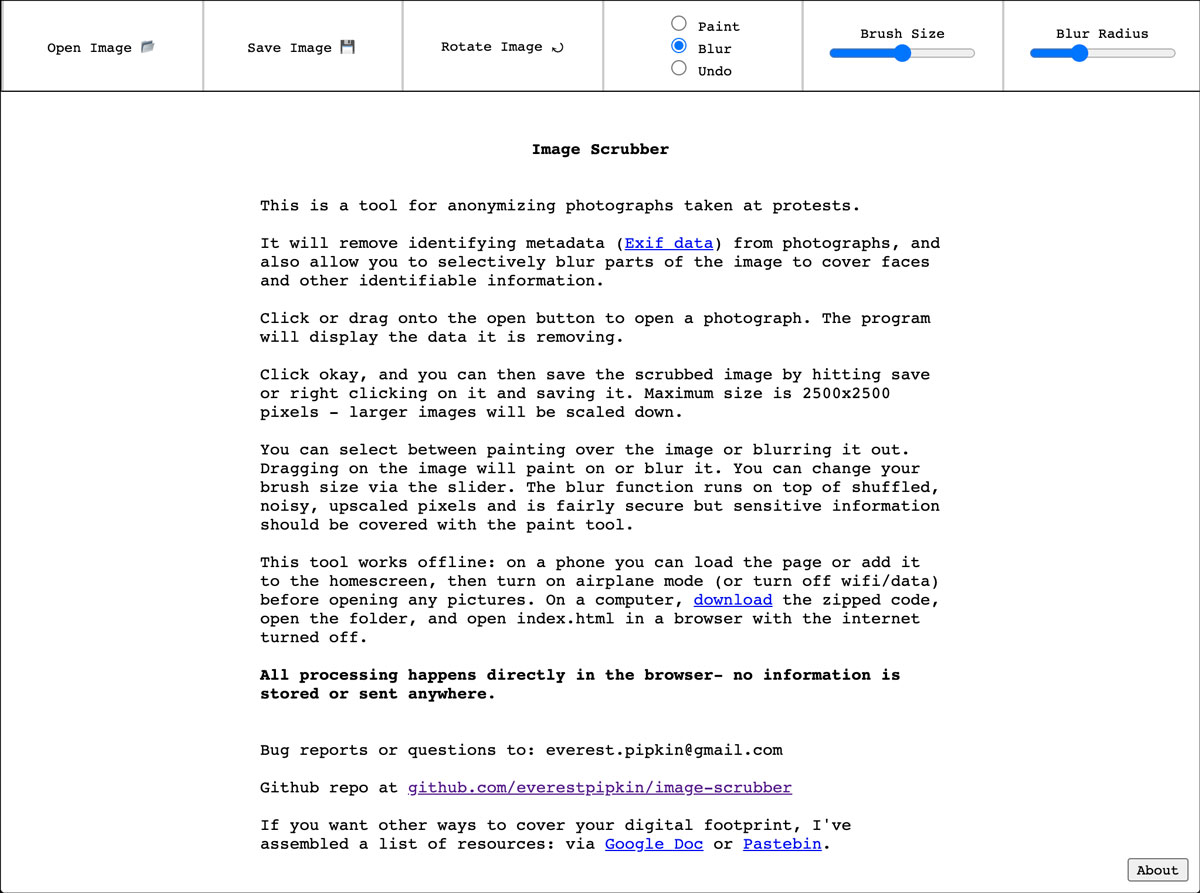

Image Scrubber

Artist Everest Pipkin’s Image Scrubber removes metadata from digital photographs and allows users to selectively blur faces and other identifiable features. With so much concern over images of protests potentially being used to make arrests, this tool protects the identities of those fighting for change, while still leaving space for the documentation of their actions.

While many of the artist’s other projects are more poetic in nature, Pipkin designated this particular technology as a tool, not as a piece of art. This decision is reflected in Image Scrubber’s practicality: it works offline, can be accessed on a phone, and does not save or store any user information.

Autonets

To empower communities that regularly face systemic violence, micha cárdenas, an artist, poet and professor at UCSC, designed Autonets: wearable, mesh-networked electronic clothing that radiates private communication servers. When groups of people wear these pieces of electronic fashion, they join ad hoc autonomous communities within which they can set their own communication codes and conduct.